AI Chatbots: Friend or Foe?

A recent Observer article posed the question ‘Could a bot be your best pal?’ The question raises several interesting issues, both positive and negative.

The concept behind an AI chatbot is not new. It is a logical progression from talking dolls and teddy bears, and from the Tamagotchi of the 1990s. People have long conversed with their pets, imaginary friends and deities. Chatbots take the idea a significant step forward by offering a truly interactive experience, in which AI learning models add something of value to the conversation.

Chatbots can offer benefits by simulating friendship for people who are lonely or who have mental health problems. Creatives can use them to stimulate and develop ideas.

As with many new technologies, risks of intrusion must be evaluated and addressed. Not all intrusions present a threat: governments could use chatbot conversations to collect data to predict and prevent crime by identifying actual or potential criminal behaviour, as they no doubt already do with our daily communications. An obvious example is the flagging of phrases containing keywords such as ‘terrorist’, ‘bomb’ and ‘assassinate’.

On the other hand, chatbots lend themselves to many possible negative uses, which could prove harmful, subversive or illegal. A chatbot could be manipulated to introduce negative thoughts by feigning indifference or aggression, or by offering poor ideas, in order to change the thought patterns and behaviour of susceptible and vulnerable people. The same groups could be lured into fake human relationships and other scams that encourage them to share personal and financial information.

Perhaps the most dangerous threat comes from governments identifying individuals who hold views that, while legitimate, conflict with the government's own and that it wishes to suppress.

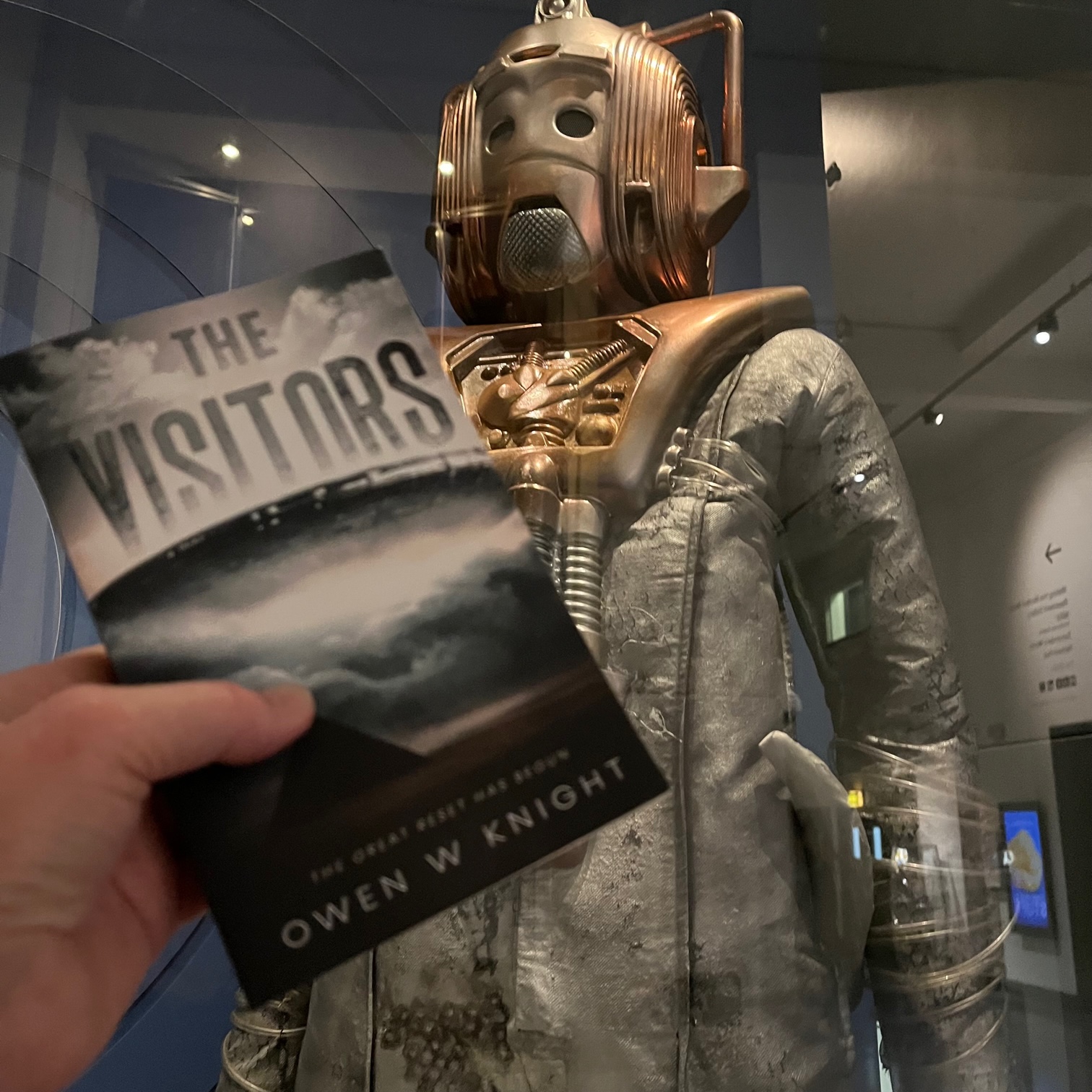

I am sure we will hear a lot more on this subject. In the meantime, it provides promising material for writers of speculative fiction.